July edit: if you just want a Windows Python deployment that version-matches Maya 2017, here. If you have other versions, read on for instructions!

When it comes to Maya python development, setting up a comfortable and reliable working environment feels like something of a rite of passage. For some, it's basic and prescribed - made to ensure consistency within a team. For others, it's free-form and gradually evolves to suit their unique tastes and needs. But whatever the origin, tech artists love showing off their dev environments. And hopefully they address one of the long-term dangers of python slanging:

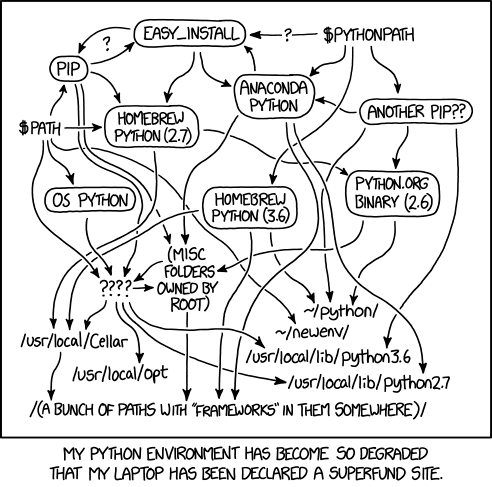

Python environment confusion and corruption has become the stuff of legends. Credit xkcd.

Despite the memery, I think it's worth remembering that the ability to have lots of python environments and seemingly duplicate libraries on a single machine is a very intentional feature. When doing python development, I need an explicit contract between my project and the libraries/modules it depends on. For example, say I have some Maya UV tools which I built using numpy. Then, a year later, I want to do some unrelated data visualization so I pip install matplotlib. Numpy is one of matplotlib's dependencies, so the setup will auto-update numpy to the version which matplotlib says it needs. The problem is, if numpy changed its interface or I/O at all in the past year, then my UV tools could now be broken. That's a lot of debugging for a toolset that has been working perfectly for a year!

How can this be avoided? If only my UV tools had their own discrete python environment then the numpy modules which they needed would be unaffected by the matplotlib install. A virtual environment is a simple way to enforce that contract and ensure that its dependencies are always what my project expects.

I'm sure this must make sense on the level of the main application loop. But, just... what??

A good write-up (with a Maya dev perspective) can be found at Siew Yi Liang's blog. If you need examples of the problems that can occur without virtualenvs, give it a read. Sometimes I feel like I'm missing out on good horror stories by following the hard-won advice of more experienced developers. But then I remember that I work with Maya (and, heck, software in general), which means there is never a shortage of weird "features" to ponder. For example!

But back to virtualenv. Let's make our goal explicit: we want a python virtual environment which is capable of loading Maya's site-packages (including running Maya standalone), but also of pip-ing new modules (eg, numpy) which are compatible with Maya's python interpreter. The links above cover the basics of virtualenv setup pretty thoroughly, so I won't waste time rehashing that. What I'd like to get into are the unique challenges of using this approach with Maya-python development.

If anyone has success virtualizing mayapy itself, please leave a comment - but I tried several things and it never worked for me.

The Challenges

The ideal solution, of course, would be to simply virtualize Maya's own python environment. But mayapy.exe seems to not want to be virtualized. You didn't think it would be that easy, did you?! This is Maya country!

The next easy thing to try would be virtualizing a basic python installation and using site to make Maya's packages available. But you may be able to predict why this won't work:

"ImportError: DLL load failed: %1 is not a valid Win32 application"

Essentially, we have a version mismatch. Specifically, it's complaining about the fact that my base Python install is 32-bit but all of Maya's libraries are 64-bit - but this isn't the only mismatch. Executing sys.version (or just reading the header in a fresh interpreter) shows us the following:

# python.exe
>>> sys.version
'2.7.14 (v2.7.14:xxxx, *timestamp*) [MSC v.1500 32 bit (Intel)]'

# mayapy.exe
>>> sys.version
'2.7.11 (default, *timestamp*) [MSC v.1700 64 bit (AMD64)]'

There are three things that must match: python version, bit version, and compiler version. The exact numbers vary based on your setup, so for the rest of this article I will match to Maya 2017 on Windows - MSC v.1700, a.k.a. toolkit v110, a.a.k.a. Visual Studio 2012. Matching the python and bit versions would be easy - there are official python releases for that. But unless the compiler used for your version of Maya already matches that of your python environment (lucky you!), we're going to have to compile python ourselves from source code. Go ahead and download and unzip your required version - 2.7.11 in my case.
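If you'd rather compare the two interpreters programmatically than by eye, here is a minimal sketch that pulls those three values out of a sys.version string. The names interpreter_fingerprint and mismatches are my own inventions for illustration, and the parsing assumes the Windows-style "[MSC v.NNNN NN bit (...)]" suffix shown above; on other compilers the msc and bits fields simply come back as None.

```python
import re


def interpreter_fingerprint(version_string):
    """Extract python version, MSC compiler version and bitness from sys.version."""
    python_version = version_string.split()[0]
    bracket = re.search(r"\[(.*?)\]", version_string)
    compiler = bracket.group(1) if bracket else ""
    msc = re.search(r"MSC v\.(\d+)", compiler)
    bits = re.search(r"(\d+) bit", compiler)
    return {
        "python": python_version,
        "msc": int(msc.group(1)) if msc else None,
        "bits": int(bits.group(1)) if bits else None,
    }


def mismatches(first, second):
    """Return the sorted names of the fields that differ between two fingerprints."""
    return sorted(key for key in first if first[key] != second[key])


# The two strings quoted in this article.
stock = interpreter_fingerprint(
    "2.7.14 (v2.7.14:xxxx, *timestamp*) [MSC v.1500 32 bit (Intel)]")
maya = interpreter_fingerprint(
    "2.7.11 (default, *timestamp*) [MSC v.1700 64 bit (AMD64)]")
print(mismatches(stock, maya))
```

Run interpreter_fingerprint(sys.version) once in mayapy and once in your candidate python, and the build is only worth attempting to skip if mismatches() comes back empty.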

The Build

If you're thinking "hold on, this is more than I signed up for,"... I hear you. But I'll guide you through it for Windows (sorry Unix, but it's likely easier for y'all anyway - Windows is the ginger stepchild as far as python is concerned). We only need the right version of Visual Studio (I'll be using 2012) - noted in the dev docs for each Maya version.

The /PCBuild folder is where the VS solution lives, so open a console here. Essentially, we have to build pcbuild.proj for an x64 platform, using the v110 toolkit (again, replace this number with whatever is relevant to your copy of Maya). There is a build.bat script included - give it a shot, but don't expect much:

One of many problems I had with the bundled build.bat. If nothing else, running it shows that it's just a wrapper for msbuild.

Well, that's fine, we only need to execute two commands anyway: one to find msbuild and one to call it.

C:\...\Python-2.7.11\PCBuild> "%VS110COMNTOOLS%..\..\VC\vcvarsall.bat"

C:\...\Python-2.7.11\PCBuild> msbuild "pcbuild.proj" /t:Build /p:Configuration=Release /p:Platform=x64 /p:IncludeExternals=false /p:IncludeTkinter=false /p:IncludeBsddb=false /p:IncludeSsl=false /p:PlatformToolset=v110

The %VS110COMNTOOLS% in the first command refers to the v110 toolkit - so, again, if your version of Maya is different then you may have to change the "110" to your toolkit version. It's just a machine-agnostic way to find your Visual Studio directory, so that vcvarsall.bat can add msbuild to the terminal session. Once msbuild finishes, you should have a new directory /amd64 in PCBuild. In it is everything we need for a bare-bones python install! But let's spend a moment organizing it like a normal python distribution.
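Since the only moving part in those two commands is the toolkit number, here is a small sketch that generates them for a given toolset. The function names are hypothetical, and it assumes the VS<number>COMNTOOLS naming pattern used above carries over to your toolkit, which may not hold for every Visual Studio release, so check that the variable actually exists on your machine first.

```python
def toolset_env_var(toolset):
    """Map a toolset name such as 'v110' to 'VS110COMNTOOLS' (assumed naming pattern)."""
    if not (toolset.startswith("v") and toolset[1:].isdigit()):
        raise ValueError("expected a toolset like 'v110', got %r" % toolset)
    return "VS%sCOMNTOOLS" % toolset[1:]


def build_commands(toolset="v110", target_platform="x64", configuration="Release"):
    """Return the vcvarsall and msbuild command lines as a pair of strings."""
    vcvars = '"%{0}%..\\..\\VC\\vcvarsall.bat"'.format(toolset_env_var(toolset))
    properties = [
        ("Configuration", configuration),
        ("Platform", target_platform),
        ("IncludeExternals", "false"),
        ("IncludeTkinter", "false"),
        ("IncludeBsddb", "false"),
        ("IncludeSsl", "false"),
        ("PlatformToolset", toolset),
    ]
    flags = " ".join("/p:%s=%s" % pair for pair in properties)
    msbuild = 'msbuild "pcbuild.proj" /t:Build ' + flags
    return vcvars, msbuild


for command in build_commands("v110"):
    print(command)
```

With the defaults this prints exactly the two commands listed above; pass a different toolset string to get the equivalents for another Maya version.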

Create a new directory (e.g., Python1700) wherever you want, with subfolders /DLLs, /libs, and /Scripts. From /amd64, copy the .exe-s and python27.dll to the fresh base directory, as well as all .pyd files to /DLLs and all .lib and .exp files to /libs. Then, from one above PCBuild, copy the /Include and /Lib folders to Python1700. The only problem is we don't have SSL, which is required for pip... but Maya does! Go to your Maya python directory (C:/Program Files/Autodesk/Maya2017/Python) and copy /DLLs to your newly made distribution (overwrite, or not. The duplicates are the same). Now you're ready to get pip. And it's done!
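If you expect to repeat this for more than one Maya version, the copy steps are easy to script. This is only a sketch of the layout described above: assemble_distribution is a made-up name, source_root is assumed to be the unzipped Python source folder (the one containing PCBuild), and dest_root should be a fresh directory. Copying Maya's /DLLs folder is left as the manual step described above.

```python
import glob
import os
import shutil


def assemble_distribution(source_root, dest_root):
    """Arrange the PCBuild/amd64 output like a normal python distribution."""
    build_dir = os.path.join(source_root, "PCBuild", "amd64")

    # Base directory plus the three subfolders.
    for sub in ("DLLs", "libs", "Scripts"):
        folder = os.path.join(dest_root, sub)
        if not os.path.isdir(folder):
            os.makedirs(folder)

    # (file pattern, destination subfolder); "" means the base directory.
    rules = [
        ("*.exe", ""),
        ("python27.dll", ""),
        ("*.pyd", "DLLs"),
        ("*.lib", "libs"),
        ("*.exp", "libs"),
    ]
    copied = []
    for pattern, sub in rules:
        for path in sorted(glob.glob(os.path.join(build_dir, pattern))):
            target = os.path.join(dest_root, sub, os.path.basename(path))
            shutil.copy2(path, target)
            copied.append(os.path.relpath(target, dest_root))

    # Include and Lib live one level above PCBuild; copytree needs a fresh target.
    for folder in ("Include", "Lib"):
        shutil.copytree(os.path.join(source_root, folder),
                        os.path.join(dest_root, folder))
    return copied
```

The returned list of relative paths is handy for a quick sanity check that python27.dll and the .pyd files actually landed where you expect.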

Outcome and Integration

Good snakes don't coil into a nested nightmare like earbuds.

This reveals the deeper truth that python environment wrangling is just digital cable management. Where are my zip-ties?

We now have a python environment which version-matches Maya, can be virtualized whenever, and can use all of the tools - like pip - that we would expect from a usual python deployment. However, at this point we have to address a practical and suddenly evident criticism of using different virtualenvs for Maya tool development - we can't force Maya to use them natively. We can batch Maya in these venvs, but that doesn't help a tool which is made for artists. We still need to integrate with a normal Maya session.

We can use sitecustomize or sys.path to point to a venv's unique site-packages, but this brings its own challenge: python doesn't support importing multiple versions of the same module. In a single session, the first import statement causes a python module object to be created and cached in sys.modules; importing the same module again (even in packages and sub-packages) just accesses the cached module object - even if the cached module's directory has been replaced in sys.path. See:

# in file printNumpy.py:
import numpy
print(numpy)

# in python shell
>>> import sys
>>> import numpy
>>> numpy
<module 'numpy' from 'C:\Python27\lib\site-packages\numpy\__init__.pyc'>
>>> sys.path.remove(r"C:\Python27\lib\site-packages")
>>> sys.path.append(r"***\venv\Lib\site-packages")
>>> execfile(r"***\printNumpy.py")
<module 'numpy' from 'C:\Python27\lib\site-packages\numpy\__init__.pyc'>
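If you want to see the same caching behavior without a numpy install or a venv on hand, here is a self-contained sketch. It fakes two site-packages folders as temp directories, each holding a throwaway module; demo_dep and make_fake_site are names I made up for the demo.

```python
import os
import sys
import tempfile


def make_fake_site(label):
    """Create a temp directory holding a one-line module named demo_dep."""
    site_dir = tempfile.mkdtemp(prefix="site_%s_" % label)
    with open(os.path.join(site_dir, "demo_dep.py"), "w") as handle:
        handle.write("ORIGIN = %r\n" % label)
    return site_dir


site_a = make_fake_site("a")
site_b = make_fake_site("b")

# The first import creates the module object and caches it in sys.modules.
sys.path.insert(0, site_a)
import demo_dep
first_origin = demo_dep.ORIGIN

# Swap the search path, just like the numpy session above.
sys.path.remove(site_a)
sys.path.insert(0, site_b)
import demo_dep  # served from sys.modules; site_b is never consulted
second_origin = demo_dep.ORIGIN

print("first import: %s, second import: %s" % (first_origin, second_origin))
```

Both values come from the first directory, because the second import statement never touches sys.path at all.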

So if I have toolsets dependent on two different virtualenvs, doesn't that limit how they can be used? What if I want to use these sets of tools concurrently? Maya's python interpreter can't behave like two different environments in the same session. Or can it?

"""
"Reset" a python session by removing entries from sys.modules.
NOTE: ACTIVE REFERENCES TO MODULES STILL EXIST AND WORK.
This script is more for cleaning the slate for encapsulated packages
(which may have specific dependency requirements) to run without conflicts.
Credit to Nicholas Rodgers.
"""
import sys
import inspect
from os.path import dirname


# I'm going to define this little function to make this cleaner
# It's going to have a flag to let you specify the userPath you want to clear out
# But otherwise I'm going to assume that it's the userPath you're running the script from (__file__)
def resetSessionForScript(userPath=None):
    if userPath is None:
        userPath = dirname(__file__)
    # Convert this to lower just for a clean comparison later
    userPath = userPath.lower()

    toDelete = []
    # Iterate over all the modules that are currently loaded
    for key, module in sys.modules.items():
        # There's a few modules that are going to complain if you try to query them
        # (built-ins, None placeholders), so I've popped this into a try/except to keep it safe
        try:
            # Use the "inspect" library to get the moduleFilePath that the current module was loaded from
            moduleFilePath = inspect.getfile(module).lower()
        except Exception:
            continue
        # Don't try and remove the startup script, that will break everything
        if moduleFilePath == __file__.lower():
            continue
        # If the module's filepath starts with the userPath, add it to the list of modules to delete
        if moduleFilePath.startswith(userPath):
            print("Removing %s" % key)
            toDelete.append(key)

    # Deleting entries while iterating over the dictionary itself would change its size and
    # break the loop. So now we go over the list we made and delete all the modules
    for module in toDelete:
        del sys.modules[module]

It's a hackish implementation of a sort of unload() function - for whole directories. With it, we can tear-down and set-up a toolset's dependencies quickly and painlessly. Switch between tools written in different virtualenvs by executing:

>>> resetSession.resetSessionForScript(tool_dir)
>>> sys.path.remove(old_venv)
>>> sys.path.append(new_venv)
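Those three steps can be bundled into one call. The sketch below is a simplified stand-in rather than the script above: switch_site_packages and its parameters are hypothetical names, it matches modules by their __file__ attribute instead of using inspect, and unlike the original it does not protect the calling script, so don't point purge_root at the folder the caller lives in.

```python
import os
import sys


def _is_under(path, root):
    """True if path sits inside root (case-normalized, as Windows paths need)."""
    path = os.path.normcase(os.path.abspath(path))
    root = os.path.normcase(os.path.abspath(root))
    return path == root or path.startswith(root + os.sep)


def switch_site_packages(old_site, new_site, purge_root=None):
    """Forget modules loaded from purge_root, then swap the sys.path entries.

    purge_root defaults to old_site; pass a tool directory instead to mirror
    the three-step session above.
    """
    purge_root = purge_root or old_site
    purged = []
    # Iterate over a snapshot so deleting entries is safe.
    for name, module in list(sys.modules.items()):
        module_file = getattr(module, "__file__", None)
        if module_file and _is_under(module_file, purge_root):
            del sys.modules[name]
            purged.append(name)

    # sys.path.remove raises ValueError for a missing entry, so check first.
    if old_site in sys.path:
        sys.path.remove(old_site)
    if new_site not in sys.path:
        sys.path.append(new_site)
    return sorted(purged)
```

The return value lists what was forgotten, which is useful to log while you're still convincing yourself that the right modules are being reloaded. As with the original script, objects that already hold references to the old modules keep using them.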

And away you go with the new virtualenv. For consistency with the Maya-bundled stuff, I add a sitecustomize.py to all of my Maya development venvs to ensure that all of Maya’s paths (and little quirks) are applied to the venv:

"""Initialize the virtual environment for Maya standalone development.

To make the venv more like mayapy.exe, add all Maya paths in
a machine-agnostic way.
"""
import os
import site

_m_loc = os.environ.get("MAYA_LOCATION")
_app = os.environ.get("MAYA_APP_DIR")

os.environ["PYTHONHOME"] = _m_loc + "/Python"
os.environ["Path"] = _m_loc + "/bin;" + os.environ["Path"]
os.environ["QT_PLUGIN_PATH"] = _m_loc + "/qt-plugins"

site.addsitedir(_app + "/scripts")
site.addsitedir(_app + "/2017/scripts")
site.addsitedir(_m_loc + "/bin/python27.zip")
site.addsitedir(_m_loc + "/Python/DLLs")
site.addsitedir(_m_loc + "/Python/lib")
site.addsitedir(_m_loc + "/Python/lib/plat-win")
site.addsitedir(_m_loc + "/Python/lib/lib-tk")
site.addsitedir(_m_loc + "/bin")
site.addsitedir(_m_loc + "/Python")
site.addsitedir(_m_loc + "/Python/Lib/site-packages")
site.addsitedir(_m_loc + "/devkit/other/pymel/extras/completion/py")

print("venv extra paths set by {0}".format(__file__))
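One caveat: if MAYA_LOCATION or MAYA_APP_DIR isn't set on a machine, the string concatenation in that file fails with a TypeError as soon as the venv's interpreter starts. A more defensive variant might look like the sketch below; add_existing_site_dirs is a hypothetical helper of mine, and it only registers paths that actually exist on disk.

```python
import os
import site


def add_existing_site_dirs(root, relative_paths):
    """Register each root/relative path that exists; return the ones added."""
    added = []
    for relative in relative_paths:
        candidate = os.path.join(root, *relative.split("/"))
        # exists() rather than isdir() so that python27.zip still qualifies
        if os.path.exists(candidate):
            site.addsitedir(candidate)
            added.append(candidate)
    return added


maya_location = os.environ.get("MAYA_LOCATION")
if maya_location:
    added_paths = add_existing_site_dirs(maya_location, [
        "bin/python27.zip",
        "Python/DLLs",
        "Python/lib",
        "Python/Lib/site-packages",
    ])
    print("added %d Maya paths" % len(added_paths))
else:
    print("MAYA_LOCATION is not set; leaving sys.path alone")
```

The list of relative paths here is abbreviated; extend it with whichever entries from the full sitecustomize.py you rely on.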

Beyond the C libraries & Maya’s own modules, though, your packages are free to mismatch and conflict to the moon. Some modules may be unavailable to 64-bit python, and some may be available but only at non-default sources. Here's a couple to get you started:

pip install -i https://pypi.anaconda.org/carlkl/simple numpy
pip install -i https://pypi.anaconda.org/carlkl/simple scipy

Whew. That wraps it up. This subject is a fairly deep rabbit hole, so I hope to have helped establish a tunnel back to the surface. Questions or problems? Let me know.